Benchmark of the iFigure AI Agent in the Medical Domain

Objective

The objective of this benchmark was to evaluate the ability of the iFigure AI agent to provide accurate answers to medical-domain questions. Particular attention was given to assessing the impact of the Retrieval-Augmented Generation (RAG) mechanism on response accuracy.

Experimental Configuration

1. Base Model

The agent was built upon the following large language model (LLM):

This is a compact, quantized (Q4_K_M) version of a 14-billion-parameter model optimized for efficient inference.

2. Dataset

The evaluation employed the following dataset:

This dataset contains medical-domain questions with reference answers and is specifically designed to assess reasoning capabilities within the medical domain.

A random subset of 199 questions was selected for the benchmark.
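The report does not state how the 199 questions were drawn. One reproducible way to draw such a subset is sketched below; the dataset size `n_total`, the seed, and the helper name `sample_question_ids` are hypothetical, not values or code from the benchmark.

```python
import random


def sample_question_ids(n_total: int, k: int = 199, seed: int = 42) -> list[int]:
    """Return k distinct question indices drawn uniformly at random, reproducibly.

    n_total and seed are illustrative assumptions, not values from the report.
    """
    if k > n_total:
        raise ValueError("subset size exceeds dataset size")
    rng = random.Random(seed)  # private RNG: the draw does not depend on global state
    return sorted(rng.sample(range(n_total), k))


ids = sample_question_ids(n_total=1000)
print(len(ids), ids[:5])
```

Fixing the seed lets Test A and Test B be run on exactly the same questions, which is what makes the two curves comparable.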

3. Search Infrastructure

The AI agent had access to the Kavunka search engine. Its index included:

- specialized medical websites,

- pharmaceutical resources,

- and non-medical websites.

Thus, retrieval was performed in a realistic and noisy environment, increasing the strictness and ecological validity of the experiment.

Testing Scenarios

Two experimental configurations were evaluated:

Test A: Without RAG

- The agent did not perform any search queries.

- Responses were generated solely based on the knowledge embedded within the LLM.

Test B: With RAG (Without PWG)

- The agent performed search queries using the Kavunka engine.

- Responses were generated based on retrieved information (retrieval + generation).

- The PWG (Post-Word Generation rollback) mechanism was disabled.
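The only difference between the two tests is whether retrieved text reaches the model. A minimal sketch of that switch follows. Everything in it is hypothetical: `search` is a toy keyword-overlap ranker standing in for the Kavunka engine (whose API the report does not describe), and the corpus, `build_prompt`, and the prompt template are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    url: str
    text: str


# Hypothetical stand-in for the Kavunka index: a tiny in-memory corpus that,
# like the real index, mixes medical and non-medical pages.
CORPUS = [
    Doc("https://example.org/metformin", "Metformin is a first-line drug for type 2 diabetes."),
    Doc("https://example.org/insulin", "Insulin lowers blood glucose."),
    Doc("https://example.org/football", "The match ended in a draw."),
]


def search(query: str, k: int = 2) -> list[Doc]:
    """Toy keyword-overlap ranking; NOT the Kavunka ranking algorithm."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(d.text.lower().split())), d) for d in CORPUS]
    scored = [(s, d) for s, d in scored if s > 0]  # drop pages with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(question: str, use_rag: bool) -> str:
    """Test A: use_rag=False (LLM knowledge only). Test B: use_rag=True."""
    if not use_rag:
        return f"Question: {question}\nAnswer:"
    context = "\n".join(f"[{d.url}] {d.text}" for d in search(question))
    return (
        "Answer using the sources below.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


print(build_prompt("first-line drug for type 2 diabetes", use_rag=True))
```

In this toy run the non-medical page shares no terms with the query and is filtered out; with a real, noisy index, irrelevant pages can still rank highly, which is the condition the benchmark deliberately preserves.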

Rationale for Excluding PWG

PWG (Post-Word Generation rollback) involves reverting generation when “unresolved” tokens are detected and attempting to regenerate the response. However, preliminary testing demonstrated that:

- the improvement in answer accuracy was negligible,

- response latency increased significantly,

- additional rollback and token regeneration cycles were required.

Therefore, PWG was excluded from the final experimental configuration.
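The report does not specify how PWG detects "unresolved" tokens or from which position it regenerates. The sketch below assumes a hypothetical marker token and a rollback to just before the first such token; `generate_with_rollback`, `UNRESOLVED`, and `fake_generate` are illustrative names, not the iFigure implementation. Its main point is the cost: every rollback adds another generation call.

```python
from typing import Callable

UNRESOLVED = "<?>"  # hypothetical marker for an "unresolved" token


def generate_with_rollback(
    generate: Callable[[list[str], int], list[str]],
    prompt: list[str],
    max_rollbacks: int = 3,
) -> tuple[list[str], int]:
    """Hypothetical PWG-style loop, not the iFigure implementation.

    generate(context, attempt) returns new tokens continuing `context`.
    Returns the final answer tokens and how many generation calls were made.
    """
    prefix: list[str] = []  # answer tokens kept across rollbacks
    output: list[str] = []
    calls = 0
    for attempt in range(max_rollbacks + 1):
        output = prefix + generate(prompt + prefix, attempt)
        calls += 1
        if UNRESOLVED not in output:
            return output, calls
        # Roll back: keep only the tokens before the first unresolved one.
        prefix = output[: output.index(UNRESOLVED)]
    return output, calls  # rollback budget exhausted; return last attempt


def fake_generate(context: list[str], attempt: int) -> list[str]:
    """Toy generator: the first attempt contains an unresolved token."""
    return ["Metformin", UNRESOLVED, "mg"] if attempt == 0 else ["500", "mg"]


tokens, calls = generate_with_rollback(fake_generate, ["Dose?"])
print(tokens, calls)  # ['Metformin', '500', 'mg'] 2
```

Even in this best case, one rollback doubles the number of generation calls; in the worst case the loop runs `max_rollbacks + 1` times, which is consistent with the latency increase observed in preliminary testing.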

Evaluation Methodology

Each generated response was compared with the reference answer using the BERT F1 metric (BERTScore F1, denoted bert_f1).
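BERTScore matches each token embedding of the candidate answer to its most similar reference token (and vice versa) by cosine similarity, then combines the resulting precision and recall into F1. A minimal pure-Python sketch of that computation follows, assuming per-token contextual embeddings are already available (in practice they come from a BERT-family encoder, for example via the `bert_score` package). It omits the optional IDF weighting and baseline rescaling, and the report does not say which settings were used.

```python
import math

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def bertscore_f1(cand: list[Vector], ref: list[Vector]) -> float:
    """Greedy-matching F1 in the style of BERTScore (no IDF weights, no rescaling)."""
    sim = [[cosine(c, r) for r in ref] for c in cand]        # rows: candidate tokens
    precision = sum(max(row) for row in sim) / len(cand)    # best match per candidate token
    recall = sum(max(col) for col in zip(*sim)) / len(ref)  # best match per reference token
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy 2-D "embeddings": the candidate has one extra token unrelated to the reference.
cand = [[1.0, 0.0], [0.0, 1.0]]
ref = [[1.0, 0.0]]
print(round(bertscore_f1(cand, ref), 4))  # 0.6667
```

Here recall is 1.0 (the single reference token is matched exactly) but precision is 0.5 (one candidate token has no counterpart), so F1 = 2 · 0.5 · 1.0 / 1.5 ≈ 0.667.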

Responses were sorted from most accurate (maximum bert_f1) to least accurate (minimum bert_f1).

Metric Interpretation

- bert_f1 > 0.9: the response is considered correct.

- Higher values indicate closer semantic alignment with the reference answer.
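The sorting and the correctness criterion above amount to a descending sort plus a strict threshold count. A short sketch, where `rank_and_count` is a hypothetical helper and the scores are illustrative rather than benchmark data:

```python
def rank_and_count(scores: list[float], threshold: float = 0.9) -> tuple[list[float], int]:
    """Sort bert_f1 scores from most to least accurate and count 'correct' answers."""
    ranked = sorted(scores, reverse=True)
    n_correct = sum(1 for s in ranked if s > threshold)  # strict '>' as in the criterion
    return ranked, n_correct


ranked, n_correct = rank_and_count([0.87, 0.95, 0.91, 0.62])
print(ranked, n_correct)  # [0.95, 0.91, 0.87, 0.62] 2
```

Because the comparison is strict, a response scoring exactly 0.9 is not counted as correct.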

Results

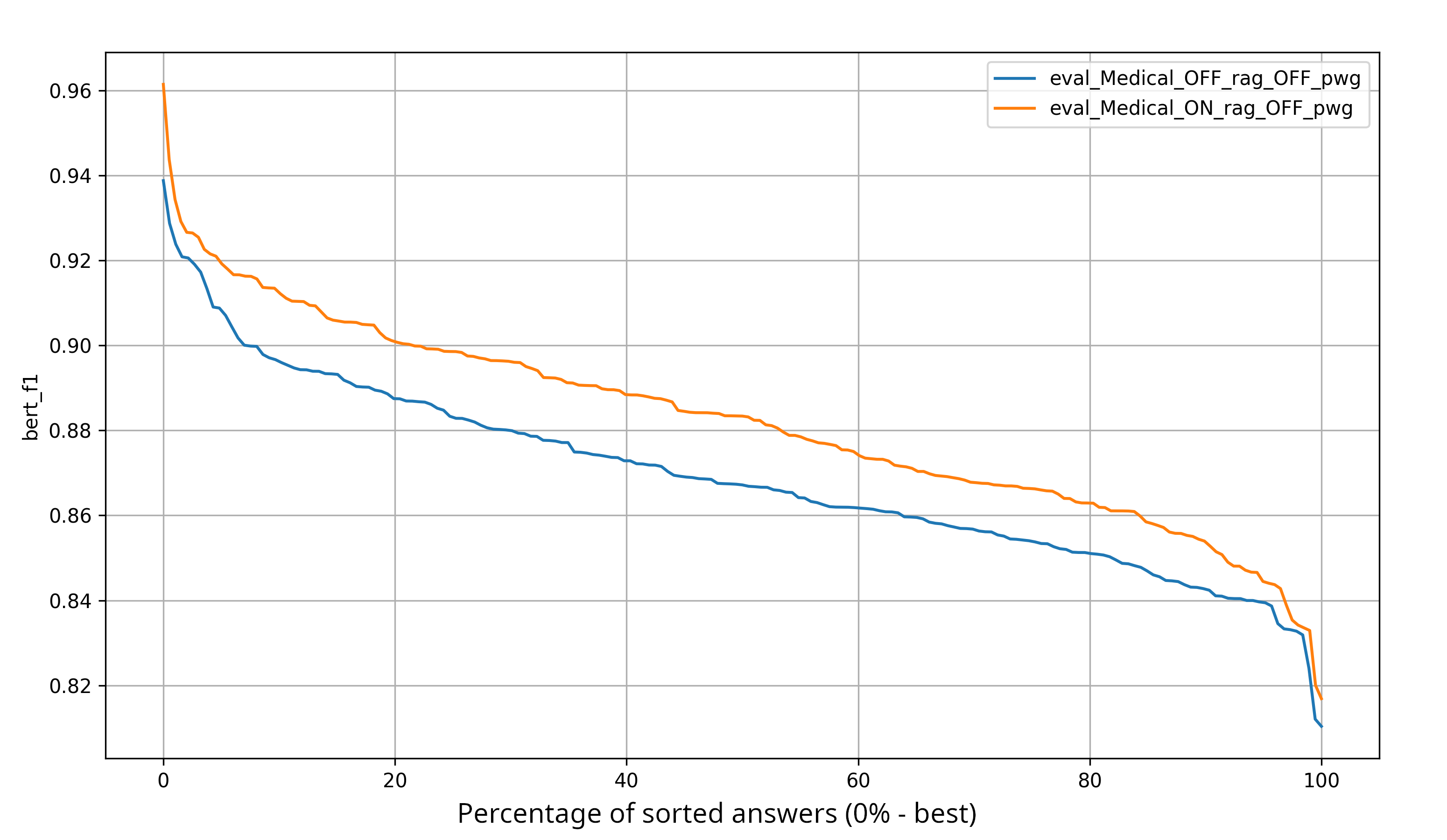

The results are presented as two curves:

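- Blue curve: model without RAG and without PWG (Test A)

- Orange curve: model with RAG, without PWG (Test B)

Each curve is sorted independently in descending order of bert_f1, so a given position on the x-axis is a rank, not the same question in both tests.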

Observations

- Across the entire distribution, the orange curve consistently lies above the blue curve.

- The number of responses with bert_f1 > 0.9 is substantially higher when RAG is used.

- The difference is particularly pronounced in the upper segment (highest-quality answers).

- In the lower-quality tail of the distribution, RAG also demonstrates a stable advantage.
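Since both curves are sorted, "the orange curve lies above the blue curve everywhere" means that for every rank k, the k-th best RAG score is at least the k-th best non-RAG score. This is a statement about the two score distributions, not about individual questions. A small check of that property; `dominates` is a hypothetical helper and the scores are illustrative, not the benchmark's data:

```python
def dominates(upper: list[float], lower: list[float]) -> bool:
    """True if the descending-sorted `upper` curve is >= `lower` at every rank."""
    a = sorted(upper, reverse=True)
    b = sorted(lower, reverse=True)
    if len(a) != len(b):
        raise ValueError("curves must have the same number of responses")
    return all(x >= y for x, y in zip(a, b))


rag = [0.95, 0.80, 0.92]   # illustrative scores, not benchmark data
base = [0.70, 0.91, 0.85]
print(dominates(rag, base), dominates(base, rag))  # True False
```

Note that in this example the second question scores lower with RAG (0.80 vs 0.91), yet the RAG curve still dominates after sorting; per-question improvements would require a paired comparison instead.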

Key Findings

1. RAG Significantly Improves Accuracy

The integration of retrieval mechanisms substantially increases the number of correct answers (bert_f1 > 0.9).

This is particularly critical in the medical domain, where:

- high accuracy is required,

- factual correctness is essential,

- hallucinations are unacceptable.

2. Search Access Compensates for LLM Limitations

Even a 14B-parameter model without access to up-to-date external sources:

- demonstrates lower accuracy,

- more frequently deviates from reference answers.

RAG enables the system to:

- rely on relevant external sources,

- refine factual information,

- reduce the probability of generative errors.

3. PWG Is Not Justified Under the Given Configuration

Since quality improvements were minimal while response latency increased significantly, the use of PWG in this medical QA scenario is not justified.

Conclusion

The benchmark demonstrates that integrating RAG into the iFigure AI agent:

- substantially improves response quality,

- significantly increases the proportion of correct answers,

- enhances system suitability for medical-domain applications.

The results confirm that in domain-specific fields such as medicine, the combination of an LLM with a retrieval mechanism represents a considerably more effective architecture than using an LLM in isolation.